Following the great response to uploading my recent work with the Kinect, I’ve decided to also put up my Bachelor’s thesis (which was already available online, but well hidden).

This work, however, is not based on a widely available system such as the Kinect, but the general idea and software should be applicable to many generic setups, including a standard 2D laser scanner with an adequate scan rate. The project goal was to develop a plug-in for the Mobotware system used at the university, and the final result satisfactorily fulfilled the specified requirements.

First part of the abstract:

Abstract

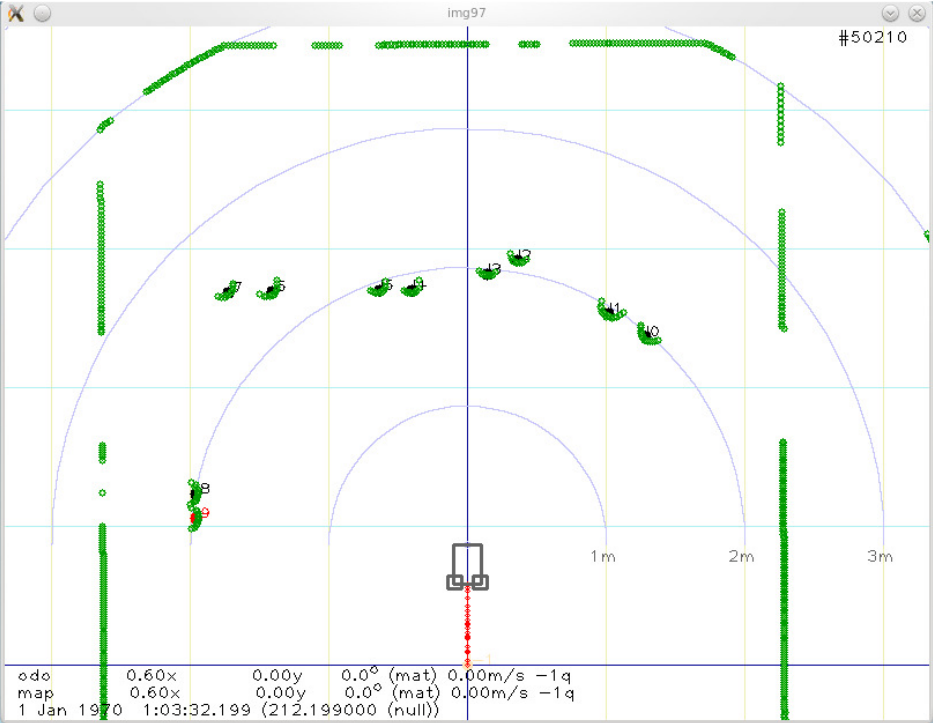

The goal of this project has been to develop a software solution capable of detecting and tracking humans in the immediate environment of mobile robots. Limitations are imposed: only a single laser scanner (a.k.a. range finder) may be used and the final product must be compatible with the Mobotware framework. In compliance with these demands, the chosen solution has been created as a plugin running directly in the Mobotware framework, analyzing data on shapes in the environment to separate human legs from static objects. (…)

Detection and Tracking of People and Moving Objects, Mikkel Viager 2011
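To make the idea of separating legs by shape a little more concrete, here is a minimal sketch of single-scan leg detection. It is not the plug-in's actual code: it assumes a generic 2D scan given as a list of ranges in metres with a fixed angular step, splits the scan wherever neighbouring ranges jump, and keeps segments whose end-to-end width is plausible for a leg. The function names and the thresholds (`jump=0.1`, widths 0.05 to 0.25 m) are hypothetical values chosen for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def polar_to_cartesian(ranges: List[float], angle_min: float, angle_step: float) -> List[Point]:
    """Convert range readings (metres) to (x, y) points in the scanner frame."""
    return [
        (r * math.cos(angle_min + i * angle_step), r * math.sin(angle_min + i * angle_step))
        for i, r in enumerate(ranges)
    ]


def segment_scan(ranges: List[float], angle_min: float, angle_step: float,
                 jump: float = 0.1) -> List[List[Point]]:
    """Start a new segment wherever neighbouring ranges differ by more than `jump` metres."""
    if not ranges:
        return []
    points = polar_to_cartesian(ranges, angle_min, angle_step)
    segments = [[points[0]]]
    for i in range(1, len(ranges)):
        if abs(ranges[i] - ranges[i - 1]) > jump:
            segments.append([])
        segments[-1].append(points[i])
    return segments


def leg_candidates(ranges: List[float], angle_min: float, angle_step: float,
                   min_width: float = 0.05, max_width: float = 0.25,
                   jump: float = 0.1) -> List[Point]:
    """Return the centroid of every segment whose end-to-end width fits a leg."""
    centres = []
    for seg in segment_scan(ranges, angle_min, angle_step, jump):
        width = math.dist(seg[0], seg[-1])
        if min_width <= width <= max_width:
            centres.append((sum(p[0] for p in seg) / len(seg),
                            sum(p[1] for p in seg) / len(seg)))
    return centres


if __name__ == "__main__":
    # Synthetic scan: a wall at 3 m with one leg-sized object at 1 m.
    scan = [3.0] * 50 + [1.0] * 10 + [3.0] * 40
    print(leg_candidates(scan, angle_min=0.0, angle_step=0.01))
```

A static object of the same width (a table leg, for instance) would pass this filter too, so shape alone is only a first step; motion across consecutive scans is what separates people from such objects.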

Many of the documented experiments were conducted in a virtual environment, which made it easier to spot bugs during software development. In the final part of the report, real-world applications are examined more closely to determine which factors might influence the robustness, and thereby the usability, of the scanner and algorithm results. As explained in the report, a relatively high scan rate is needed to track moving human legs, while each individual scan can be analyzed on its own to filter out and detect objects of a certain shape.
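One way to see why the scan rate matters: between two scans a leg can move at most `max_speed / scan_rate`, and that distance is the natural gate for matching detections to existing tracks. The sketch below is a hypothetical greedy nearest-neighbour tracker, not the method from the thesis; the 2 m/s leg speed is an assumed figure for illustration only.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def max_step(max_speed: float, scan_rate_hz: float) -> float:
    """Largest distance (metres) a leg can move between two consecutive scans."""
    return max_speed / scan_rate_hz


def associate(tracks: Dict[int, Point], detections: List[Point], gate: float) -> Dict[int, Point]:
    """Greedy nearest-neighbour association.

    Each existing track takes the closest unused detection within `gate` metres.
    Tracks with no detection inside the gate are dropped, and leftover detections
    start new tracks with fresh ids.
    """
    updated: Dict[int, Point] = {}
    unused = list(detections)
    for track_id, last in tracks.items():
        if not unused:
            break
        nearest = min(unused, key=lambda d: math.dist(d, last))
        if math.dist(nearest, last) <= gate:
            updated[track_id] = nearest
            unused.remove(nearest)
    next_id = max(tracks, default=-1) + 1
    for det in unused:
        updated[next_id] = det
        next_id += 1
    return updated


if __name__ == "__main__":
    # Hypothetical numbers: legs moving at up to 2 m/s, scanned at 10 Hz vs 2 Hz.
    print(max_step(2.0, 10.0), max_step(2.0, 2.0))
    tracks = {0: (1.0, 0.0), 1: (1.0, 0.5)}
    print(associate(tracks, [(1.05, 0.02), (1.02, 0.48)], gate=max_step(2.0, 10.0)))
```

At 10 Hz the gate is 0.2 m, well below the 0.5 m between the two example tracks, so each detection is matched unambiguously. At 2 Hz the gate grows to 1.0 m, and a detection could just as well be claimed by the wrong track.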

Even though this specific implementation of the algorithm might not be directly portable to other control systems, the considerations behind it are still valid. I don’t expect this thesis to be as useful to others as my Analysis of the Kinect, but that alone has not changed my mind about uploading it as a possible reference. Below is the embedded version of my thesis, which can also be found on my profile at scribd.com.

Detection and Tracking of People and Moving Objects

Use and refer to my thesis if you find it useful, and please leave a comment (possibly including a link to your own work) if you do =)

What is the difference between foggy video sequences and low-resolution video sequences?

Why go for single moving object detection instead of multiple? Please justify this technically.

I agree that it might be advantageous to look for pairs of legs and track them together instead of individually. I don’t know whether this would give better estimates of which objects are actually human legs.

However, in such an implementation it should also be considered that a pair of legs may be oriented such that one is hidden behind the other.
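A minimal sketch of the pairing idea discussed above, assuming leg candidates are already available as (x, y) points in metres. It greedily pairs each leg with its nearest neighbour if they are closer than a hypothetical `max_sep` of 0.5 m, and, to account for one leg hiding behind the other, keeps an unpaired leg as a person on its own rather than discarding it.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def pair_legs(legs: List[Point], max_sep: float = 0.5) -> List[Point]:
    """Return one estimated position per person.

    Two legs closer than `max_sep` metres (an assumed threshold) become a person
    at their midpoint. A leg with no partner is kept as a person by itself,
    since its partner may be occluded behind it.
    """
    remaining = list(legs)
    people: List[Point] = []
    while remaining:
        leg = remaining.pop(0)
        if remaining:
            partner = min(remaining, key=lambda p: math.dist(p, leg))
            if math.dist(partner, leg) <= max_sep:
                remaining.remove(partner)
                people.append(((leg[0] + partner[0]) / 2, (leg[1] + partner[1]) / 2))
                continue
        people.append(leg)  # single visible leg: possible occlusion
    return people
```

Greedy pairing is the simplest choice here; with several people standing close together, a global assignment would avoid pairing legs from different people.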

It’s been a long time, hah 😀

I finished this project, take a look:

http://www.youtube.com/watch?v=r3DLVdcBmZg

Looking good. =)

Are you using the Kinect data to correct odometry values, or are you running purely on odometry for positioning?

Also, have you experienced problems with precision when using the stock Kinect foot as a mounting structure? (I prefer to mount the sensor head directly, as the foot connection is neither very strong nor very precise.)

It would be great.

Thanks.

I’m confused about one thing:

Since the Kinect gives us a depth image with a distance value for each pixel, it may treat the floor as an obstacle, so if we use OpenCV on a grayscale version of that image, it may detect obstacles wrongly.

I’ve never used point clouds before, so I don’t know whether they can distinguish the FLOOR from OBJECTS.

Do you have any suggestions for this case?

The idea would be to look for jumps in distance values, which indicate an object standing out from the floor. This can be done either by examining the values in the 3D point cloud, or by using OpenCV to analyse the edges that the same jumps produce in a grayscale image.
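A minimal sketch of the jump idea on the raw depth image, using NumPy only. It assumes depth values in millimetres with 0 meaning "no reading" (this depends on the driver), and a hypothetical 50 mm threshold. The floor recedes gradually from row to row, so its per-pixel depth change stays small, while the outline of an object standing on it produces a large jump.

```python
import numpy as np


def depth_jumps(depth_mm: np.ndarray, threshold_mm: float = 50.0) -> np.ndarray:
    """Boolean mask of pixels whose depth differs from the right or lower
    neighbour by more than `threshold_mm`. Pixels with depth 0 (no reading)
    are ignored on both sides of a comparison."""
    d = depth_mm.astype(np.float32)
    valid = d > 0
    mask = np.zeros(d.shape, dtype=bool)

    # Horizontal neighbours: compare column j with column j + 1.
    dx = np.abs(np.diff(d, axis=1))
    mask[:, :-1] |= (dx > threshold_mm) & valid[:, 1:] & valid[:, :-1]

    # Vertical neighbours: compare row i with row i + 1.
    dy = np.abs(np.diff(d, axis=0))
    mask[:-1, :] |= (dy > threshold_mm) & valid[1:, :] & valid[:-1, :]
    return mask
```

A fixed threshold is a simplification: far away, the floor is seen at a grazing angle and its depth changes faster per pixel, so in practice the threshold may need to grow with distance.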

Another smart way would be to locate and filter out the floor completely, leaving only obstacles. Two of my fellow students have just done a thesis where they use this method, and I will of course link to it here if they decide to put it online.
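I can't say which method my fellow students used, but one common way to locate the floor in a point cloud is a RANSAC plane fit. The sketch below is a generic version of that technique, not their implementation: it repeatedly fits a plane through three random points, keeps the roughly horizontal plane with the most points within `tol` metres, and removes those points. The `up` axis, the 15° tilt limit and the 2 cm tolerance are assumptions that depend on how the sensor is mounted.

```python
import numpy as np


def fit_floor_plane(points, iterations=200, tol=0.02, up=(0.0, 1.0, 0.0),
                    max_tilt_deg=15.0, rng=None):
    """RANSAC plane fit restricted to roughly horizontal planes.

    Returns ((normal, d), inlier_mask) for the plane normal . p + d = 0 with the
    most points within `tol` metres, or (None, all-False mask) if none is found.
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(rng)
    up = np.asarray(up, dtype=float)
    up /= np.linalg.norm(up)
    min_cos = np.cos(np.radians(max_tilt_deg))
    best_plane, best_mask = None, np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        a, b, c = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(b - a, c - a)
        length = np.linalg.norm(normal)
        if length < 1e-9:
            continue  # the three points were (nearly) collinear
        normal /= length
        if abs(normal @ up) < min_cos:
            continue  # too steep to be the floor, e.g. a wall
        d = -normal @ a
        mask = np.abs(points @ normal + d) < tol
        if mask.sum() > best_mask.sum():
            best_plane, best_mask = (normal, d), mask
    return best_plane, best_mask


def remove_floor(points, **kwargs):
    """Drop the floor inliers, leaving only candidate obstacles."""
    points = np.asarray(points, dtype=float)
    _, floor_mask = fit_floor_plane(points, **kwargs)
    return points[~floor_mask]
```

The tilt check is what keeps a large wall from being mistaken for the floor; without it, RANSAC simply returns whichever plane has the most points.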

Thanks for sharing.

I have a question: if I use the Kinect to avoid obstacles, do I need to use a point cloud?

That depends on the task you need to solve. The Kinect provides a depth image where each pixel has a distance value, which makes it possible either to analyse the data as a point cloud, or to convert it to a grayscale image and do obstacle detection with OpenCV.
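Both routes start from the same depth image, as this sketch shows. It assumes a 640x480 depth image in millimetres (0 = no reading) and pinhole intrinsics `FX, FY, CX, CY` set to approximate values often quoted for the Kinect v1 depth camera; these are placeholders, and a real setup should use its own calibration.

```python
import numpy as np

# Assumed pinhole intrinsics for a 640x480 Kinect v1 depth image (approximate,
# commonly quoted values). Replace with your own calibration.
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5


def depth_to_points(depth_mm: np.ndarray) -> np.ndarray:
    """Back-project every valid pixel (u, v, depth) to an (X, Y, Z) point in metres."""
    v, u = np.indices(depth_mm.shape)
    z = depth_mm.astype(np.float64) / 1000.0
    valid = z > 0
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return np.column_stack([x[valid], y[valid], z[valid]])


def depth_to_gray(depth_mm: np.ndarray, max_mm: float = 4000.0) -> np.ndarray:
    """Scale depth to 0..255 so it can be passed to OpenCV as an 8-bit image."""
    clipped = np.clip(depth_mm, 0, max_mm).astype(np.float64)
    return (clipped * 255.0 / max_mm).astype(np.uint8)
```

The trade-off is precision: with a 4000 mm range squeezed into 256 gray levels, each level covers roughly 16 mm, whereas the point cloud keeps the full depth resolution and real-world coordinates.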