During three weeks of March this year I had some time to spare for a university project of my own choosing. As I had been eager to try out the Microsoft Kinect sensor (for anything but its intended purpose), it was an obvious choice for my project.

In my hunt for a more specific subject involving the Kinect, I became increasingly annoyed with the lack of specifications for using the device as a sensor. Soon I realized that even a very basic datasheet could prove very useful for anyone wanting to get involved with the Kinect.

With the consent of the professors at the DTU Institute of Automation and Control, I decided to focus my project on creating a datasheet and evaluating the applicability of the Kinect sensor within the field of mobile robots.

This is the abstract of the final report:

Abstract

“Through this project, a data sheet containing more detailed specifications on the precision of the Kinect, than are available from official sources, has been created. Furthermore, an evaluation of several important aspects when considering the use of Kinect for mobile robots has been conducted, making it possible to conclude that the Kinect is to be considered as a very viable choice for use with mobile robots.”

Analysis of Kinect for mobile robots, Mikkel Viager 2011

Tests were carried out using a customized version of the open source Linux driver for the Kinect, running on a custom Slackware image called “Mobotware”, developed and used for all robotic platforms at our institute. From the experiment results I created several graphs and a specification table, as is commonly seen in datasheets.

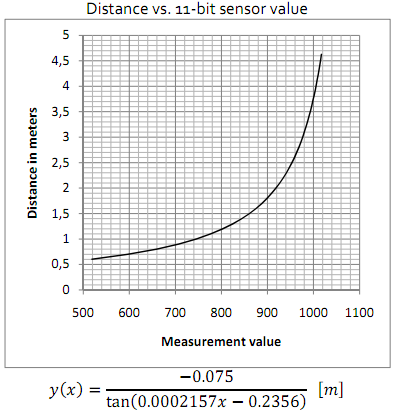

In my following Kinect projects I have made good use of the below graph and equation, for assessing expected point cloud resolutions at different distances.

The values are of course only an approximation based on measurement data from a specific Kinect unit, but have proven to be a viable estimate for general use with other Kinects as well.

Although small adjustments should be made for this equation to fit each individual Kinect, the graph still provides a good overview of what sensor values to expect. Most recently I have used this to decide how best to fit all measurements in a certain distance range into an 8-bit greyscale PNG image, for further analysis using OpenCV functions.
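As a rough sketch of that idea (not the code used in the project), the snippet below converts a raw sensor value to metres and then maps a chosen distance range onto 8-bit grey levels. The function constants are the ones quoted in the Wolfram Alpha links in the comments below; the names `raw_to_metres` and `metres_to_grey`, the linear mapping, the example range, and the choice of reserving grey level 0 for "out of range" are all assumptions made for illustration.

```python
import math

def raw_to_metres(n):
    """Approximate distance in metres for a raw Kinect sensor value n.

    Uses the approximated function from the report; the constants were
    fitted to one specific Kinect unit, so treat the result as an estimate.
    """
    return -0.075 / math.tan(0.0002157 * n - 0.2356)

def metres_to_grey(d, d_min, d_max):
    """Linearly map a distance in [d_min, d_max] to a grey level 1..255.

    Grey level 0 is reserved for distances outside the range
    (an illustrative convention, not taken from the report).
    """
    if not (d_min <= d <= d_max):
        return 0
    return 1 + round(254 * (d - d_min) / (d_max - d_min))

# Example: one raw value mapped into a hypothetical 0.6 m to 3.0 m window.
grey = metres_to_grey(raw_to_metres(800), 0.6, 3.0)
print(grey)
```

Because the raw value grows non-linearly with distance, mapping metres rather than raw values to grey levels keeps the image scale uniform in distance; mapping raw values directly would instead preserve the sensor's own step size.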

The final report was well received and is currently used as a reference for our ongoing Kinect projects, which gave rise to the idea of posting the work online for others to benefit from.

As there are no other Kinect datasheets out there (at least I haven’t been able to find any yet), my report is currently the no. 1 search result on searches for “Kinect datasheet” on google.com.

I have embedded the entire report below, scrolled down to the datasheet which is found on page 27. The entire report is there, if you are interested in reading the methods and argumentation behind the datasheet results.

The report is also available on scribd.com.

Analysis of Kinect for Mobile Robots (unofficial Kinect data sheet on page 27)


I hope that my results can be of help to others interested in the potential of the Kinect, at least until we are provided with a proper official datasheet to use instead.

If you found my datasheet useful for your own Kinect project, I’d be very interested in knowing about it! Please leave a comment describing what you are working on, how you used my datasheet and perhaps how you found it =)

Update:

This Kinect Fact sheet released by Microsoft seems to be the closest we get to official information…

If you want the manufacturers’ details on the key components the Kinect is made of, links to these datasheets are collected over at OpenKinect.org’s Wiki.

Update 2 (June 16th 2011)

Today this post was promoted as a news item on the official DTU Electrical Engineering institute’s homepage =)

Update 3 (March 14th 2012)

Added a link to a downloadable PDF version of the report, as per several requests.

Hi,

I’m part of a team of five students working on an indoor mapping project.

The main goal of the project is to map entire indoor spaces (a building, a flat, or other indoor places) and create 3D models, initially for video games, although they could be used for other things too.

We would use the Kinect as the hardware for mapping many rooms, and then write software that builds one big mesh from all the smaller ones created before.

What do you think about this project? Do you think it is a good idea?

Is it possible to do it?

Do you know of other similar projects?

If you have any leads that we could follow, please reply to this post.

Hi,

Sounds like a fun project.

I guess you want to do something similar to Kintinuous and maybe you could take a look at some of the tools used for smoothing models in e.g. Skanect.

My suggestion would be to start looking into using ROS to obtain your data, and then some of the awesome features of PCL to treat and simplify the data to less dense geometry structures. Maybe something like this example.

Good luck with your project!

Thanks for all this information, I’ll start looking into it. 🙂

We already know of Kintinuous; our project is something similar to this, or like Matterport.

Thanks again!

Thanks for your quick response.

With my second query I wanted to point out that, for resolution measurement, I think we need to measure against real values. For example, we could measure the distance between the marks on a measuring tape (say, spaced 3 mm apart) and compare the scan result against the true value to decide the resolution capability of the device, rather than deciding it purely from values in the raw point cloud. What are your views on this?

I agree.

The raw best case values are not of much use in evaluating actual operation performance and capabilities. It would be interesting and useful to experiment with viewing highly detailed objects and determine how small depth detail features can be considered as reliably detectable and distinguishable.

Hi,

Thanks a lot for your efforts.

I have two queries:

1. The disparity range is normally stated to be 11 bits, but there is also information suggesting it is 13 bits (please follow the link below):

http://social.msdn.microsoft.com/Forums/en-US/kinectsdknuiapi/thread/3fe21ce5-4b75-4b31-b73d-2ff48adfdf52/

2. The spatial resolution along X and Y, as reported by PrimeSense and observed by you experimentally, is ~3 mm at 2 m. But is the ‘definition’ of spatial resolution described in your paper useful, given that I cannot distinguish between two points spaced 3 mm apart at a distance of 2 m from the sensor in the point cloud (observed experimentally)?

Hi,

I’m glad that you find it useful.

For your queries:

1. What you are referring to seems to be related to the Kinect for Windows. All of my work was done with the original Kinect for Xbox 360 with the OpenNI driver in Linux. I have not looked into the official SDK released by Microsoft, but I bet the data is formatted very differently from the raw value measurements I had with the early OpenNI driver.

Thus, I cannot help you with the range data format from the Windows SDK. Sorry.

2. I will actually be looking into this in my current project. Currently, I am not sure exactly how the PCL handles the Kinect data and the resolution. The experimental conclusion was based on observation of the raw data resolution, which may be filtered in some way in the Windows SDK. I am not sure how much filtering is done to the raw depth data before it can be reached from the Windows SDK, but it is very likely that it has been put through some smoothing.

Hi Mivia,

thank you very much for your quick and detailed response! This explains a lot! I wonder why 11 bits are still used for encoding the distance. If the realistic range is really only up to approximately 1023 (not even taking into account a reasonable lower cap), there is no point in wasting one bit on information that is not needed.

By the way, I am very curious how you discovered this function as I’ve not come across it anywhere else so far and also did not find a corresponding documentation by PrimeSense or rather OpenNI (this makes sense as OpenNI is just the Natural Interaction framework). It certainly is very valuable to have!

Best Regards,

Chris

Hi Chris, I am glad that you find my answer satisfactory.

Regarding the function, I approximated it by analyzing my experiment results, after the advising professor on the project suggested that it might be somewhat similar to the corresponding function for stereo camera setups.

I just realized that I didn’t include the raw experiment data in the report. I will consider adding it in an upcoming revised version.

But if you do a representative batch of tests and obtain sensor values for a number of distances, you should be able to recreate a similar approximated function. Actually, I didn’t look into how much, or in which parameters, the function differs from one Kinect device to another.
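One possible way to recreate such a function from your own (sensor value, distance) pairs is sketched below. It is an illustration, not the procedure used for the report: it assumes the same functional form as the function plotted in this thread, holds the numerator constant fixed at 0.075, and rewrites the relation as a straight line, atan(-0.075 / d) = a·N + c, which an ordinary least-squares fit can solve. The helper name `fit_angle_line` is hypothetical, and the sample pairs are synthetic placeholders generated from the function itself, not real measurements.

```python
import math

B = 0.075  # numerator constant, held fixed here (an assumption of this sketch)

def fit_angle_line(samples):
    """Least-squares fit of atan(-B / d) = a * n + c.

    samples: iterable of (raw sensor value n, measured distance d in metres).
    Returns the slope a and offset c of the fitted line.
    """
    xs = [n for n, _ in samples]
    ys = [math.atan(-B / d) for _, d in samples]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    c = mean_y - a * mean_x
    return a, c

# Synthetic placeholder data generated from the approximated function.
# Replace these pairs with your own measured (n, d) values.
samples = [(n, -B / math.tan(0.0002157 * n - 0.2356)) for n in range(500, 1001, 100)]

a, c = fit_angle_line(samples)
print(a, c)
```

With real measurements the recovered `a` and `c` will differ from unit to unit, and if the fixed numerator does not suit a particular device, a full three-parameter non-linear fit would be the more general choice.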

Hi Mivia,

that sounds reasonable. It might be interesting to find out about the function for different devices. Thank you very much again for the helpful answers! Good luck with your studies!

Regards,

Chris

Hi Mivia,

I just dug through your paper and very much liked it! I thought it is a great compilation of helpful resources about the Kinect.

However, when going into the details, I noticed a problem with your suggested transformation function for the sensor value (N) to the depth in meters. In your paper on page 17 you state that the sensor value is a code of 11 bits length (which I can confirm from Kinect programming projects) in the interval of 0-2048 respectively (that should be 2047 to be exact, 2 ^ 11 – 1).

The transformation as described on pp. 17 and 18 and plotted as on pp.18 and 27 is erroneous for values up to that limit, as you can easily see when plotting the actual function, e.g. on Wolfram Alpha:

http://www.wolframalpha.com/input/?i=Plot%5B-0.075+%2F+%28tan%280.0002157x-0.2356%29%29%2C%7Bx%2C400%2C2048%7D%5D . Maybe I missed something?

Best Regards,

Chris

Hi Chris,

Glad that you like my paper!

Regarding the interval, I agree that it should of course be 0-2047 for 11 bits. My mistake. I’ll see if scribd.com has an option for putting up revised versions of documents.

We will, however, never come even close to the higher values as a result of the function properties, which you also mention.

The distance as a function of measurement value is indeed as you plot it. This is, however, a very intended behavior, which I should have pointed out in the paper by defining an interval of usability.

Unfortunately we have no specifications available from the original developers and thus no maximum operational range. That graph is a very good tool for estimating how the precision of measured distance rapidly decays for L > ~5m.

We can conclude that we will never receive sensor values from the erroneous end of the function. A logical evaluation of the physical requirements shows that we would need to measure either an infinite or a negative distance in order to get any measurement value greater than approximately 1092.

Thank you for pointing this out, as it is unfortunately not stated very clearly in the paper. I will see if I can add a more complete description.

Actually… since we can’t trust distance measurements very well for long distances anyway, a logical limit to set would be a truncation to 10 bit numbers.

With a maximum measurement value of 1023, and a corresponding distance of very close to 5 meters, I would trust the sensor to deliver reasonable and robust results within this range.

http://www.wolframalpha.com/input/?i=Plot%5B-0.075+%2F+%28tan%280.0002157x-0.2356%29%29%2C{x%2C500%2C1023}]

Then, of course, comes the problem of deciding the lower limit =)
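For anyone who wants to check these numbers without Wolfram Alpha, here is a small sketch using the same function as in the links above. It computes the sensor value at which the tangent argument crosses zero (beyond which the function returns negative distances) and how much the distance changes per single sensor step at a few values. The names `n_singular` and `step_metres` and the chosen sample values are only for illustration.

```python
import math

A, C, B = 0.0002157, -0.2356, 0.075  # constants of the approximated function

def raw_to_metres(n):
    """Approximate distance in metres for raw sensor value n."""
    return -B / math.tan(A * n + C)

# Sensor value where the tangent argument is zero: the distance goes to
# infinity here and turns negative for larger values.
n_singular = -C / A

def step_metres(n):
    """Change in distance when the sensor value goes from n to n + 1."""
    return raw_to_metres(n + 1) - raw_to_metres(n)

print("singular at n =", round(n_singular, 1))
for n in (600, 800, 1000, 1023, 1080):
    print(n, round(raw_to_metres(n), 3), "m, step", round(step_metres(n), 4), "m")
```

The step size grows quickly as the sensor value approaches the singular point, which is the same precision decay that the graph shows for long distances.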

Awesome ^^

I’ve just finished computing distance using two webcams.

Here’s my video: http://www.youtube.com/watch?v=GAOQ6cFdYzM

Now I’m going to buy a Kinect to do something like that for a mobile robot. I am a bit concerned about the Kinect’s processing speed 🙁

This one will be very useful for me. Thanks

May I drop a line here if I run into problems? ^^

What spacing do you have between the cameras?

And what is the precision of the distance measurements?

It could be interesting to know how the precision falls off with distance and compare it with the Kinect.

You are of course welcome to ask questions. =)

In case I cannot come up with an answer, maybe one of the other visitors can be of help.

Ya,

The spacing between the two cameras is 6 cm.

The precision of the distance measurements is about 20 – 90 cm.

Sure, if we use better cameras and increase the spacing, it will detect at longer distances.

I’ve just bought a Kinect 😀

Now I am deciding between the Kinect SDK and OpenNI & NITE for programming.

Hi, I can’t find its weight anywhere! Can you help me? I’m going crazy! Thank you!

I finally got around to doing a simple measurement of the weight of the Kinect unit itself.

One Kinect weighs in at ~0.45 kg (not including cords).

If you need a lightweight setup, I guess you could reduce the length of the otherwise long cables.

The power cable is pretty straightforward, but you want to make sure you know what you are doing if you shorten the USB cable as well.

Hope this is what you needed =)

I found this very useful for my report on Time-of-Flight cameras, which included a short comparison to the Kinect camera. Thanks a lot!

Glad to hear that. Feedback is awesome.

I’d be interested in reading your report about Time-of-Flight, if you decide to share your work with the world. In that case, please let me know where I can find it.

It’s not written in English, so I doubt you would understand a word^^ Thanks for your interest though.